3 minute read

1 minute read

TotemBall is a free Xbox Live Arcade game, available only to owners of the Xbox Live Vision camera.

The game requires the player to stand in front of the camera and wave their hands around to control the on-screen action. Anyone who has played it for more than two minutes will know how quickly your arms begin to ache, which stops you from playing for very long.

My solution is JazzHands, virtual hands for your computer. Simply point the Live Vision camera at the JazzHands window and control TotemBall using the cursor keys.

1 minute read

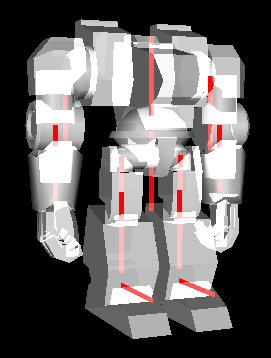

The work on AMBER’s game logic and team system has turned up a little nugget of eye candy: team colours.

Using dynamically generated textures, I’ve been able to keep a single base texture for each model, but modify it at run time to apply a team colour and a joint colour.
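A minimal sketch of one way to do this, assuming the base texture is tightly packed 8-bit RGBA and that team areas are painted in one key colour and joint areas in another (for example pure magenta and pure cyan). The key colours and the `TintTexture` and `Colour` names are hypothetical placeholders, not necessarily how AMBER marks its regions. The function copies the shared base pixels and swaps each key colour for the requested colour, leaving alpha untouched; the result could then be uploaded as a per-team texture.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical RGB triple used for key, team and joint colours.
struct Colour {
    std::uint8_t r, g, b;
};

// Returns true if the RGB part of the pixel at 'p' matches the key colour.
static bool Matches(const std::uint8_t* p, Colour key) {
    return p[0] == key.r && p[1] == key.g && p[2] == key.b;
}

// Builds a per-team copy of a shared RGBA base texture.
// Pixels painted with 'teamKey' become 'team', pixels painted with
// 'jointKey' become 'joint'; all other pixels, and every alpha value,
// are copied unchanged. The base buffer itself is never modified.
std::vector<std::uint8_t> TintTexture(const std::vector<std::uint8_t>& base,
                                      Colour teamKey, Colour team,
                                      Colour jointKey, Colour joint) {
    assert(base.size() % 4 == 0 && "expected tightly packed RGBA data");
    std::vector<std::uint8_t> out(base);
    for (std::size_t i = 0; i < out.size(); i += 4) {
        std::uint8_t* p = &out[i];
        if (Matches(p, teamKey)) {
            p[0] = team.r; p[1] = team.g; p[2] = team.b;
        } else if (Matches(p, jointKey)) {
            p[0] = joint.r; p[1] = joint.g; p[2] = joint.b;
        }
    }
    return out;
}
```

Generating the tinted copies once at load time keeps a single base texture per model on disk, at the cost of one extra texture in memory per team.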

2 minute read

I have written a few wrappers and objects for OpenGL during the development of AMBER. The first was a general OpenGL wrapper. This used the top left of the screen as the origin for orthographic projections, mainly because I was used to it being there and it avoided problems with mouse coordinates.

Next came the CBFG text class. This was intended to be dropped into other people’s code, so I used a bottom-left origin, which seems to be the standard in OpenGL.

More recently, I’ve incorporated my GUI class into the GL wrapper and bolted my text object onto it to provide text boxes, lists, etc.

This is where the problems began: the GL class and the GUI object use the top left as the origin while the text class uses the bottom left, so all the text in the controls was upside down.

I’ve rewritten the GL class and GUI class to use the bottom left as the origin, as this is the OpenGL way. However, it seems strange that every other system I’ve used treats the top of the screen as the origin of the Y axis. Why does GL have to be different? Is there anything to be gained by doing this? Or could I just fudge the projection matrix to use the top as the origin?
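On the projection question, a rough sketch: the x/y rows of the `glOrtho(left, right, bottom, top, near, far)` matrix map a coordinate to normalised device coordinates as ndc = (2v - (max + min)) / (max - min), and OpenGL’s device coordinates have +y pointing up. Passing the window height as `bottom` and 0 as `top`, e.g. `glOrtho(0, width, height, 0, -1, 1)`, therefore puts y = 0 at the top of the screen. The `OrthoToNdc` and `MouseYToBottomLeft` helpers below are hypothetical; they just reproduce that formula on the CPU so the two conventions can be compared, along with the usual conversion of a mouse y (reported from the top by most windowing systems) for a bottom-left origin.

```cpp
#include <cassert>

// 2D point in pixel or normalised device coordinates.
struct Vec2 {
    double x, y;
};

// Applies the x/y rows of the glOrtho(left, right, bottom, top, ...) matrix:
//   ndc = (2 * v - (max + min)) / (max - min)
// Swapping bottom and top flips the y axis, giving a top-left origin.
Vec2 OrthoToNdc(Vec2 v, double left, double right, double bottom, double top) {
    assert(right != left && top != bottom);
    return {(2.0 * v.x - (right + left)) / (right - left),
            (2.0 * v.y - (top + bottom)) / (top - bottom)};
}

// Converts a mouse y measured from the top of the window into a pixel row
// measured from the bottom, for a bottom-left origin projection such as
// glOrtho(0, width, 0, height, -1, 1).
int MouseYToBottomLeft(int mouseY, int windowHeight) {
    return windowHeight - 1 - mouseY;
}
```

One catch with the flipped projection: mirroring the y axis reverses the winding order of every triangle, so with face culling enabled the `glFrontFace` setting may need to change, and quads built for a y-up system (such as glyphs) come out upside down, which is the text problem described above.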

Ah well, it’s done now. I may revisit this during optimisation, but that’s a long way off.

1 minute read

Can’t seem to stay away from the coding contests.

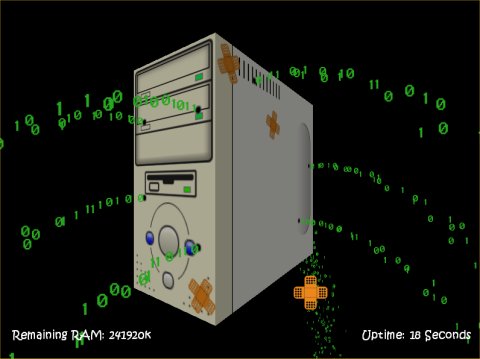

GPWiki again; this time the topic was ‘Particles’, so I knocked up a little game called ‘Leaky Apps’.

1 minute read

A small break for a family holiday and some work commitments. Also, I entered the GPWiki.org coding contest last week with a pathfinding demo.

2 minute read

Some progress on the in-game model handling…

The previous in-game model format was MD3, which stores a complete snapshot of the mesh for every frame. This creates huge model files, and the vertex normals for each frame had to be calculated at startup; the normal calculations alone took 30-45 seconds per model. The files were over 1 MB each and had a huge memory footprint. The format also made the modelling workflow a nightmare, and very much a one-way process.
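To show why the startup cost grows with every stored frame, here is a minimal sketch of a typical per-frame normal pass: area-weighted averaging of face normals over an indexed triangle list. The `ComputeVertexNormals` name and the data layout are assumptions for illustration, not the loader AMBER actually used.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 {
    float x, y, z;
};

static Vec3 Sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

static Vec3 Cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Computes smooth vertex normals for ONE animation frame.
// 'indices' holds three vertex indices per triangle (counter-clockwise winding).
// Each face's unnormalised cross product (length = 2 * triangle area) is added
// to its three vertices, so larger faces contribute more; the sums are then
// normalised. Vertices used by no triangle keep a zero normal.
std::vector<Vec3> ComputeVertexNormals(const std::vector<Vec3>& positions,
                                       const std::vector<unsigned>& indices) {
    assert(indices.size() % 3 == 0);
    std::vector<Vec3> normals(positions.size(), Vec3{0.0f, 0.0f, 0.0f});
    for (std::size_t t = 0; t < indices.size(); t += 3) {
        const unsigned tri[3] = {indices[t], indices[t + 1], indices[t + 2]};
        Vec3 n = Cross(Sub(positions[tri[1]], positions[tri[0]]),
                       Sub(positions[tri[2]], positions[tri[0]]));
        for (unsigned i : tri) {
            normals[i].x += n.x;
            normals[i].y += n.y;
            normals[i].z += n.z;
        }
    }
    for (Vec3& n : normals) {
        float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        if (len > 0.0f) {
            n.x /= len; n.y /= len; n.z /= len;
        }
    }
    return normals;
}
```

Because this runs once per stored frame, a model with F frames and T triangles needs roughly F × T cross products at load time.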

2 minute read

GLSandbox is a tool that I developed to help out on my OpenGL projects. I seem to spend a great deal of time tweaking blending parameters and fiddling with alpha testing to get things to look right.
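For reference, the fixed-function stages being tweaked can be written out on the CPU. The sketch below models only the default blend equation (`GL_FUNC_ADD`) with a few common source/destination factors, plus an alpha test using `GL_GREATER`; the `Factor` enum and the `Blend` and `PassesAlphaTest` names are hypothetical stand-ins for what `glBlendFunc` and `glAlphaFunc` configure, not GLSandbox’s own code.

```cpp
#include <cassert>

struct RGBA {
    float r, g, b, a;
};

// Hypothetical subset of the glBlendFunc factors.
enum class Factor { Zero, One, SrcAlpha, OneMinusSrcAlpha };

// Returns the scalar weight for a factor. For this subset every channel uses
// the same weight, and both alpha-based factors read the SOURCE alpha, even
// when used as the destination factor.
static float Weight(Factor f, const RGBA& src) {
    switch (f) {
        case Factor::Zero: return 0.0f;
        case Factor::One: return 1.0f;
        case Factor::SrcAlpha: return src.a;
        case Factor::OneMinusSrcAlpha: return 1.0f - src.a;
    }
    return 0.0f;
}

// GL_FUNC_ADD blending: result = src * sfactor + dst * dfactor,
// clamped to [0, 1] as for a fixed-point colour buffer.
RGBA Blend(const RGBA& src, const RGBA& dst, Factor sfactor, Factor dfactor) {
    const float s = Weight(sfactor, src);
    const float d = Weight(dfactor, src);
    auto mix = [&](float sc, float dc) {
        float v = sc * s + dc * d;
        return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v);
    };
    return {mix(src.r, dst.r), mix(src.g, dst.g), mix(src.b, dst.b), mix(src.a, dst.a)};
}

// Alpha test with GL_GREATER: the fragment is kept only if its alpha is
// strictly greater than the reference value.
bool PassesAlphaTest(const RGBA& fragment, float ref) {
    return fragment.a > ref;
}
```

With (`SrcAlpha`, `OneMinusSrcAlpha`), a half-transparent red fragment over opaque blue gives an RGB result of (0.5, 0, 0.5); switching to (`One`, `One`) gives additive blending, which saturates quickly where bright fragments overlap.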